PyTorch: Basic Concepts and Usage

PyTorch is a Python-based scientific computing package. The official website describes it as:

- A replacement for NumPy to use the power of GPUs

- A deep learning research platform that provides maximum flexibility and speed

In other words, PyTorch provides a NumPy-like abstraction for representing tensors (multidimensional arrays), and it can use GPUs to speed up computation.

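Basic Data Structures

The key data structure in PyTorch is the tensor, i.e. a multidimensional array. It works much like NumPy's ndarray object, except that a tensor can also be stored and computed on a GPU.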
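The NumPy-like feel of tensors is easiest to see side by side. The sketch below creates tensors and runs element-wise arithmetic and a matrix product. It then repeats the matrix product in NumPy to show the numbers agree. The shapes and values are arbitrary and chosen only for illustration.

```python
import numpy as np
import torch

# Create tensors much like NumPy arrays
x = torch.ones(2, 3)                                    # 2x3 tensor of ones (float32)
y = torch.arange(6, dtype=torch.float32).reshape(2, 3)  # [[0,1,2],[3,4,5]]

# Element-wise arithmetic and matrix multiplication, as in NumPy
z = x + y          # [[1,2,3],[4,5,6]]
m = y @ y.T        # (2x3) @ (3x2) -> 2x2

# The equivalent NumPy computation gives the same numbers
y_np = np.arange(6, dtype=np.float32).reshape(2, 3)
m_np = y_np @ y_np.T

print(z.shape)     # torch.Size([2, 3])
print(m)
```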

- Basic tensor operations are documented here

- Converting between tensors and NumPy arrays:

```python
# from tensor to ndarray
```

Note that a CPU tensor and the NumPy array converted from it share the same memory location, so changing one also changes the other.
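The shared-memory behaviour works in both directions. This is a minimal sketch that uses only CPU tensors. Tensor.numpy() and torch.from_numpy() both return views of the same buffer. torch.tensor() is included for contrast, because it always copies its input.

```python
import numpy as np
import torch

# from tensor to ndarray: .numpy() shares memory with the (CPU) tensor
a = torch.ones(3)
b = a.numpy()
a.add_(1)            # in-place update of the tensor...
print(b)             # ...is visible in the ndarray: [2. 2. 2.]

# from ndarray to tensor: torch.from_numpy() also shares memory
c = np.zeros(3)
d = torch.from_numpy(c)
np.add(c, 1, out=c)  # in-place update of the ndarray...
print(d)             # ...is visible in the tensor: tensor([1., 1., 1.], dtype=torch.float64)

# torch.tensor() copies the data instead
e = torch.tensor(c)
c[0] = 100.0         # d[0] becomes 100.0 as well, since d shares c's memory
print(e[0].item())   # 1.0: the copy is unaffected
```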

- Using the GPU to accelerate tensor computation

```python
# Tensors can be moved onto GPU using the .cuda method.
```
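The sketch below moves tensors to the GPU so that the same script also runs on machines without CUDA. It assumes you want a CPU fallback. It uses torch.cuda.is_available() and Tensor.to(device), which is the device-agnostic counterpart of .cuda(). The matrix sizes are arbitrary.

```python
import torch

# Pick the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.randn(4, 4)
y = torch.randn(4, 4)

# .to(device) works for any device; .cuda() always targets the GPU
x_dev = x.to(device)
y_dev = y.to(device)
result = x_dev @ y_dev        # runs on the GPU when device is "cuda"

# Operands must live on the same device; move results back to the CPU
# before converting them to NumPy
result_cpu = result.cpu()
print(result.device, result_cpu.device)
```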

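Automatic Differentiation (autograd)

The automatic differentiation package, autograd, is central to all neural-network computation in PyTorch: it provides differentiation for all operations on tensors. Autograd is define-by-run (a dynamic framework). The backward pass is determined by how your code actually runs, so it can differ from one iteration to the next.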
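The define-by-run behaviour shows up as soon as ordinary Python control flow depends on the data. In this sketch, the hypothetical function f takes a different branch depending on its input. Each call therefore records a different graph and produces a different gradient.

```python
import torch

def f(x):
    # The graph autograd records depends on which branch actually runs
    if x.sum() > 0:
        return (x ** 2).sum()
    else:
        return (3 * x).sum()

a = torch.tensor([1.0, 2.0], requires_grad=True)
f(a).backward()
print(a.grad)   # tensor([2., 4.]): d/dx of sum(x**2) is 2x

b = torch.tensor([-1.0, -2.0], requires_grad=True)
f(b).backward()
print(b.grad)   # tensor([3., 3.]): d/dx of sum(3x) is 3
```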

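autograd.Variable is the central class of this package. Since PyTorch 0.4 it has been merged into Tensor, and a tensor created with requires_grad=True does all of the following directly. A Variable:

- is a thin wrapper around a tensor

- records the history of all operations applied to it

- stores its gradient in .grad

- helps build the computational graph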
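To compare the legacy class with the modern style, this minimal sketch creates one tensor through Variable and one through requires_grad=True. It then inspects the recorded history (grad_fn) and the empty .grad slot. In current PyTorch releases, calling Variable(...) simply returns a torch.Tensor.

```python
import torch
from torch.autograd import Variable

# Legacy style (deprecated since PyTorch 0.4): returns a plain Tensor now
v = Variable(torch.ones(2, 2), requires_grad=True)

# Modern equivalent: ask the tensor itself to track operations
x = torch.ones(2, 2, requires_grad=True)

# The result of an operation records how it was created
y = x + 2

print(type(v))                # <class 'torch.Tensor'>
print(x.is_leaf, x.grad_fn)   # True None: x was created by the user
print(y.grad_fn)              # <AddBackward0 object at ...>
print(x.grad)                 # None: no backward pass has run yet
```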

Once the computation is finished, calling .backward() computes all the gradients automatically:

```python
from torch.autograd import Variable
```
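Here is a worked backward pass whose gradient can be checked by hand. The sketch uses a tensor with requires_grad=True rather than Variable; both behave the same in current PyTorch. The output is the mean of 3(x + 2)² over four elements, so the gradient is 1.5(x + 2).

```python
import torch

x = torch.tensor([[1.0, 2.0], [3.0, 4.0]], requires_grad=True)

y = x + 2
out = (y * y * 3).mean()   # scalar: mean of 3*(x+2)^2 over 4 elements

out.backward()             # fills x.grad with d(out)/dx

# Analytically: d(out)/dx = 3 * 2 * (x + 2) / 4 = 1.5 * (x + 2)
# expected gradient values: [[4.5, 6.0], [7.5, 9.0]]
print(x.grad)
```

backward() can be called without arguments only on a scalar. For a non-scalar output, pass a gradient tensor of the same shape.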

Neural Networks

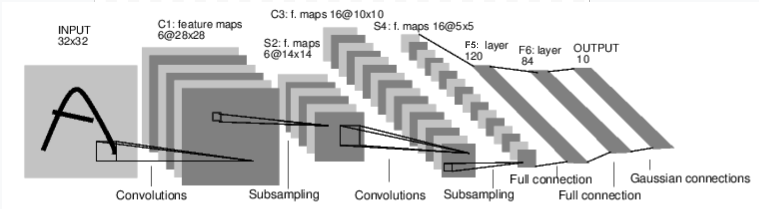

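The torch.nn package is used to build neural networks. An nn.Module contains layers and a forward(input) method that returns the output.

For example, the figure above shows a simple feed-forward neural network.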
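A compact way to express a small feed-forward network is nn.Sequential, which chains layers and runs them in order in its forward(). The layer sizes 4, 8 and 3 below are hypothetical and do not come from the figure.

```python
import torch
import torch.nn as nn

# Illustrative sizes: 4 inputs -> 8 hidden units -> 3 outputs
model = nn.Sequential(
    nn.Linear(4, 8),   # input layer -> hidden layer
    nn.ReLU(),         # non-linearity
    nn.Linear(8, 3),   # hidden layer -> output layer
)

inputs = torch.randn(5, 4)   # a batch of 5 samples with 4 features each
outputs = model(inputs)      # calling the module runs its forward()
print(outputs.shape)         # torch.Size([5, 3])
```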

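A typical training procedure for a neural network looks like this:

- Define a neural network with learnable parameters (weights);

- Iterate over a dataset of inputs;

- Process each input through the network;

- Compute the loss;

- Propagate the gradients back into the network's parameters;

- Update the parameters.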
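These six steps can be sketched end to end as a small training loop. This is a minimal illustration under stated assumptions. The toy data (noise-free samples of y = 2x + 1), the single nn.Linear layer, the learning rate of 0.1 and the batch size of 16 are all hypothetical choices.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Hypothetical toy data: learn y = 2x + 1 from noise-free samples
xs = torch.linspace(-1, 1, 64).unsqueeze(1)   # shape (64, 1)
ys = 2 * xs + 1

net = nn.Linear(1, 1)                         # 1. network with learnable weights
criterion = nn.MSELoss()
optimizer = optim.SGD(net.parameters(), lr=0.1)

for epoch in range(200):                      # 2. iterate over the dataset
    for i in range(0, len(xs), 16):           #    in mini-batches of 16
        x_batch, y_batch = xs[i:i + 16], ys[i:i + 16]
        output = net(x_batch)                 # 3. process the input
        loss = criterion(output, y_batch)     # 4. compute the loss
        optimizer.zero_grad()                 #    clear old gradients
        loss.backward()                       # 5. propagate gradients back
        optimizer.step()                      # 6. update the parameters

print(net.weight.item(), net.bias.item())     # should end up close to 2.0 and 1.0
```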

Defining the Network

To define a neural network, you only need to define its:

- Layers

- Forward function

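This is a minimal sketch of a network defined by subclassing nn.Module. The layers are created in __init__ and the forward function is written by hand, while autograd derives the backward pass. The class name Net and the layer sizes (10, 16 and 2) are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    """Hypothetical example network; layer sizes are illustrative only."""

    def __init__(self):
        super().__init__()
        # Layers: assigning nn.Module attributes registers their parameters
        self.fc1 = nn.Linear(10, 16)
        self.fc2 = nn.Linear(16, 2)

    def forward(self, x):
        # Forward function: how the input flows through the layers
        x = F.relu(self.fc1(x))
        return self.fc2(x)

net = Net()
print(net)

params = list(net.parameters())
print(len(params))           # 4: fc1.weight, fc1.bias, fc2.weight, fc2.bias
out = net(torch.randn(3, 10))
print(out.shape)             # torch.Size([3, 2])
```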

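Loss Function, Backpropagation, and Parameter (Weight) Updates

The nn package already defines many loss functions. A simple one is nn.MSELoss, which computes the mean squared error between the output and the target.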
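The value computed by nn.MSELoss can be checked by hand. With the default reduction="mean", it averages the squared differences between the input (first argument) and the target (second argument). The tensors below are made-up values.

```python
import torch
import torch.nn as nn

criterion = nn.MSELoss()   # default reduction="mean"

prediction = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([1.0, 0.0, 5.0])

loss = criterion(prediction, target)
# ((1-1)^2 + (2-0)^2 + (3-5)^2) / 3 = (0 + 4 + 4) / 3 = 8/3
print(loss.item())   # approximately 2.667
```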

The simplest weight-update rule is stochastic gradient descent (SGD): weight = weight - learning_rate * gradient
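The SGD rule can be applied by hand to every parameter of a model. This sketch assumes a hypothetical nn.Linear(3, 1) model, random data and a learning rate of 0.01. The update runs inside torch.no_grad() so that autograd does not record the update itself.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
net = nn.Linear(3, 1)
criterion = nn.MSELoss()
learning_rate = 0.01

x = torch.randn(8, 3)
y = torch.randn(8, 1)

loss = criterion(net(x), y)
net.zero_grad()      # gradients accumulate, so clear them first
loss.backward()      # fills p.grad for every parameter p

before = [p.detach().clone() for p in net.parameters()]

# weight = weight - learning_rate * gradient, for every parameter
with torch.no_grad():
    for p in net.parameters():
        p -= learning_rate * p.grad
```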

In addition, the torch.optim package implements optimization algorithms (such as SGD and Adam) for updating the weights.

```python
import torch.optim as optim
```
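With torch.optim, a single training step reduces to zero_grad(), backward() and step(). This sketch assumes the same kind of hypothetical nn.Linear(3, 1) model with random data. Other optimizers such as optim.Adam are constructed the same way, from net.parameters() and a learning rate.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)
net = nn.Linear(3, 1)
criterion = nn.MSELoss()

# The optimizer holds references to the parameters it will update
optimizer = optim.SGD(net.parameters(), lr=0.01)

x = torch.randn(8, 3)
y = torch.randn(8, 1)

# One training step
optimizer.zero_grad()        # clear gradients from the previous step
loss = criterion(net(x), y)
loss.backward()              # compute new gradients
optimizer.step()             # apply the SGD update to every parameter

new_loss = criterion(net(x), y)
print(loss.item(), new_loss.item())   # the loss should drop slightly
```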